Water bodies, including oceans, rivers, and lakes, sustain diverse ecosystems and serve as vital arteries for global transportation and trade [1]. Although waterways are essential, their use poses significant risks, particularly oil spills caused by accidents or by intentional, and potentially illegal, dumping [2]. Such spills have serious environmental and economic consequences, affecting aquatic life, coastal communities, and marine ecosystems.

Over the past decades, several oil spill incidents have occurred, the best known being the Deepwater Horizon spill in the Gulf of Mexico in 2010 [3]. Incidents like this have underscored the need for effective detection, localization, and prevention systems that leverage the latest advances in Artificial Intelligence (AI), particularly the analysis of imagery acquired through remote sensing technologies.

Leveraging Computer Vision for oil spill detection and localization

In recent years, computer vision systems have become significantly better at detecting and localizing not only distinct objects but also more complex regions within visual data. These capabilities are vital across numerous sectors, enabling automation, enhanced monitoring, and better decision-making. As applications become more specialized, there is a growing need for domain-specific datasets that capture the characteristics and challenges of particular environments, so that visual recognition systems can perform accurately and reliably in real-world scenarios.

Oil spill detection and localization

Oil spills, oil emulsions, and sheens represent different stages and types of water contamination, so identifying them is crucial for marine pollution monitoring. Accurate detection enables a faster response, helping to minimize damage to marine ecosystems, protect coastal communities, and support accountability and legal enforcement. In addition, distinguishing between oil types such as emulsions and sheens better informs cleanup strategies and risk assessment, making advanced detection systems essential for sustainable ocean management.

Due to the lack of publicly available datasets in this domain, PERIVALLON researchers have created an aerial imagery dataset for oil spill detection, classification, and localization. It consists of low-altitude images and includes both liquid classes (such as oil, emulsion, and sheen) and solid classes (such as ships and oil platforms).

Figure 1: Image samples from https://universe.roboflow.com/konstantinos-gkountakos/lados

Benchmark evaluation

To benchmark the dataset, five state-of-the-art models were evaluated, covering both instance and semantic segmentation approaches. For instance segmentation, YOLOv11 [4] was chosen; for semantic segmentation, DeepLabV3+ [5], SegFormer [6], SETR [7], and Mask2Former [8] were used. Transfer learning was applied to improve performance: the models were pre-trained on large, diverse datasets, which helps them learn broad features and patterns across many categories. In a second experiment, the models were trained with class weights to address the class imbalance in the dataset.
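The post does not specify how the class weights were derived. A common scheme, assumed here purely for illustration, is inverse-frequency weighting: each class receives a weight inversely proportional to the number of training pixels it covers, and each pixel's cross-entropy term is scaled by the weight of its true class. The helper names below (`inverse_frequency_weights`, `weighted_cross_entropy`) are hypothetical, and the sketch uses plain Python lists rather than a deep learning framework.

```python
import math
from collections import Counter


def inverse_frequency_weights(pixel_labels, num_classes):
    """Hypothetical helper (assumed scheme): w_c = total / (num_classes * count_c).

    Rare classes get weights above 1, frequent classes weights below 1.
    Classes that never occur get weight 0.0, since they cannot appear as targets.
    """
    counts = Counter(pixel_labels)  # Counter returns 0 for missing classes
    total = len(pixel_labels)
    return [
        total / (num_classes * counts[c]) if counts[c] else 0.0
        for c in range(num_classes)
    ]


def weighted_cross_entropy(probs, labels, weights):
    """Class-weighted cross-entropy over pixels.

    probs[i] is the predicted class distribution for pixel i and labels[i]
    its ground-truth class. The weighted sum is divided by the total weight
    of the target classes, mirroring the 'mean' reduction of
    torch.nn.CrossEntropyLoss when its `weight` argument is set.
    """
    num = 0.0
    den = 0.0
    for p, y in zip(probs, labels):
        w = weights[y]
        num += -w * math.log(p[y])
        den += w
    return num / den


# Invented toy labels: class 0 dominates, class 2 is rare.
labels = [0] * 6 + [1] * 3 + [2] * 1
weights = inverse_frequency_weights(labels, num_classes=3)
print([round(w, 3) for w in weights])  # [0.556, 1.111, 3.333]
```

In a PyTorch training loop, a comparable effect could be obtained by passing such weights as a tensor to the `weight` argument of `torch.nn.CrossEntropyLoss`. The toy labels are invented and do not reflect the dataset's actual class distribution.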

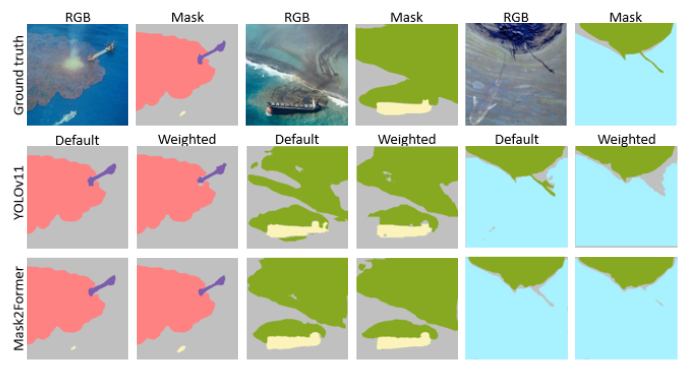

Results indicate that the semantic segmentation models outperform the instance segmentation model, especially on the liquid classes. Furthermore, all models perform better when class weights are used during training. In particular, the transformer-based models achieved a mean Intersection over Union (mIoU) above 66%, with IoU exceeding 70% for some liquid classes. Figure 2 below shows three samples from the dataset along with the predictions of every model, trained without and with class weights.

Figure 2: Representative image samples, followed by the segmentation masks predicted by each model trained without and with class weights. Color map: coral: “Emulsion”, purple: “Oil-platform”, yellow: “Ship”, green: “Oil”, cyan: “Sheen”.
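For reference, the IoU of a class is the number of pixels correctly assigned to it divided by the union of its predicted and ground-truth pixels, TP / (TP + FP + FN), and mIoU is the average of the per-class IoUs. The post does not describe its evaluation code, so the following is a generic sketch computed from flattened per-pixel labels. Skipping classes that appear in neither the ground truth nor the prediction is an assumption, since evaluation toolkits differ on this point and on whether a background class is counted.

```python
import math


def confusion_matrix(gt, pred, num_classes):
    """cm[g][p] counts pixels with ground-truth class g predicted as class p."""
    cm = [[0] * num_classes for _ in range(num_classes)]
    for g, p in zip(gt, pred):
        cm[g][p] += 1
    return cm


def per_class_iou(cm):
    """IoU_c = TP / (TP + FP + FN); NaN if class c is absent from both gt and pred."""
    n = len(cm)
    ious = []
    for c in range(n):
        tp = cm[c][c]
        fn = sum(cm[c]) - tp                       # class-c pixels predicted as another class
        fp = sum(cm[r][c] for r in range(n)) - tp  # other pixels predicted as class c
        denom = tp + fp + fn
        ious.append(tp / denom if denom else math.nan)
    return ious


def mean_iou(ious):
    """Average IoU over classes, skipping NaN entries (assumed convention)."""
    valid = [x for x in ious if not math.isnan(x)]
    return sum(valid) / len(valid)


# Invented toy example: 6 flattened pixels, 3 classes.
gt = [0, 0, 1, 1, 2, 2]
pred = [0, 1, 1, 1, 2, 0]
ious = per_class_iou(confusion_matrix(gt, pred, 3))
print([round(x, 3) for x in ious], round(mean_iou(ious), 3))  # [0.333, 0.667, 0.5] 0.5
```

Because every class contributes equally to the mean, a model that handles a rare class poorly is penalized as much as one that fails on a frequent class, which is one reason class weighting can raise mIoU.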

[1] Selamoglu, M. The Effects of the Ports and Water Transportation on the Aquatic Ecosystem. Open Access Journal of Biogeneric Science and Research 2021, 10.

[2] Lega, M.; Ceglie, D.; Persechino, G.; Ferrara, C.; Napoli, R. Illegal dumping investigation: a new challenge for forensic environmental engineering. WIT Transactions on Ecology and the Environment 2012.

[3] Leifer, I.; Lehr, W.J.; Simecek-Beatty, D.; Bradley, E.; Clark, R.; Dennison, P.; Hu, Y.; Matheson, S.; Jones, C.E.; Holt, B.; et al. State of the art satellite and airborne marine oil spill remote sensing: Application to the BP Deepwater Horizon oil spill. Remote Sensing of Environment 2012, 124, 185–209.

[4] Khanam, R.; Hussain, M. YOLOv11: An overview of the key architectural enhancements. arXiv preprint arXiv:2410.17725, 2024.

[5] Chen, L.C.; Zhu, Y.; Papandreou, G.; Schroff, F.; Adam, H. Encoder-decoder with atrous separable convolution for semantic image segmentation. In Proceedings of the European Conference on Computer Vision (ECCV), 2018, pp. 801–818.

[6] Xie, E.; Wang, W.; Yu, Z.; Anandkumar, A.; Alvarez, J.M.; Luo, P. SegFormer: Simple and efficient design for semantic segmentation with transformers. Advances in Neural Information Processing Systems 2021, 34, 12077–12090.

[7] Zheng, S.; Lu, J.; Zhao, H.; Zhu, X.; Luo, Z.; Wang, Y.; Fu, Y.; Feng, J.; Xiang, T.; Torr, P.H.; et al. Rethinking semantic segmentation from a sequence-to-sequence perspective with transformers. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2021, pp. 6881–6890.

[8] Cheng, B.; Misra, I.; Schwing, A.G.; Kirillov, A.; Girdhar, R. Masked-attention mask transformer for universal image segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2022, pp. 1290–1299.

Written by Konstantinos Gkountakos

CERTH